Why you need a Zero Trust Architecture (and how to get one)

Shifting from “trust but verify” to simply “never trust, always verify” is the only safe path. Here’s why, plus a step-by-step plan to get it done.

Introduction

One of my customers contacted me to help analyze a recent data breach. The gist of the breach is much like so many others that we hear about: a bad actor gained access to systems, was able to penetrate deeper into the network and ultimately stole information. Over 100 million customers had their account data — both financial and personal information — stolen.

In the months following the event, a small army of security professionals analyzed every aspect of the breach.

The conclusion of this analysis was very interesting.

It concluded that existing security practices were “typical” of the current state of the industry and, furthermore, that “reasonable and traditional” security measures had been widely deployed. In other words, while the security methods used may not have been state of the art, they were “good.” That’s what’s so interesting to me: “good” security still failed. Security can no longer rely on traditional castle-and-moat design.

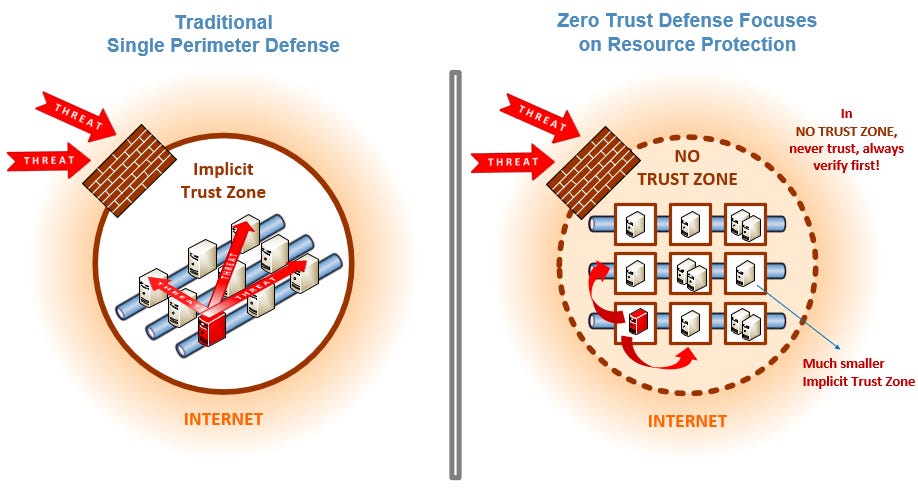

The perimeter has dissolved, and threats now lurk everywhere — both inside and outside your network. This is where Zero Trust Architecture (ZTA) comes in. ZTA transforms security from “trust but verify” to simply “never trust, always verify.”

The cost of trust-based security

In 2020, SolarWinds experienced a sophisticated supply chain attack that had far-reaching impact. Its attackers gained access to SolarWinds' systems, inserting malicious code into software updates that were then distributed to thousands of customers. Once inside networks, attackers moved laterally, compromising government agencies and major corporations.1

Why was the attack so effective? Because traditional security models rest on an assumption of trust: once inside the network, users and devices are trusted. Too many security systems still rely on this model of assumed trust.

As a fascinating report from NPR detailed, this trust-based model led to almost a year of nefarious access to companies including Microsoft, Intel, and Cisco, and to government agencies including the Treasury and the Pentagon. In fact, the breach might still be going on if the attackers hadn’t made the mistake of poking around FireEye, a security analysis firm that noticed their activity.2

In its analysis of the SolarWinds incident, Deloitte emphasized that the initial attack vector was “[likely] manipulated accounts and deconstructed log files” and that it “leveraged trusted code injection points.” These are trust-based vectors, which explains why Deloitte recommended adopting a zero trust mindset and pioneering security by design. Deloitte advocated embedding that mindset across the entire software design lifecycle, urging organizations to “assume a breach is possible, verify every user, transaction or request and do not presume trust.”3

If you’re new, welcome to Customer Obsessed Engineering! Every week I publish a new article, direct to your mailbox if you’re a subscriber. As a free subscriber you can read about half of every article, plus all of my free articles.


Weak security is never an intended outcome

Nobody sets out to intentionally build weak security. In fact, it’s generally an important topic of discussion early in a project. So why do we end up hearing about security breaches so often?

Unfortunately, those early discussions prioritize product features and deprioritize the background activity and groundwork that create a safe, secure system. Security is viewed as an “unfortunate cost,” secondary to a prototype, proof of value, the next big feature, and generating revenue. There’s an underlying belief that security can be added later, that it doesn’t need to be handled from the start.

That deprioritization is where things start to go wrong. Here’s an example, one that hopefully illustrates how seemingly innocuous decisions can lead to a bad place.

One startup launched its first product a few years ago. As is often the case with startups, the pressure to generate income was high, which means making tough decisions about what to build and what to delay. Those decisions are well-meaning: “Let’s prove the concept first; we don’t have any customers. We’ll add 2FA and secure our network before we go live.”

But of course, with a proven product that’s seemingly ready, no business wants to delay a launch for months while retrofitting security. The bare minimum gets done, along with more justifications: “We added the 2FA, that’s good enough for now. We’ll start doing container scanning and code scanning just as soon as we get this next update out the door.”

But that update attracts a lot of attention. It lands a big sale with a new partner, but the partner needs “a few changes to close the deal.” It’s priority number one.

Something else is going on during these early years, too: there’s a talent gap. That prioritization of features over infrastructure is pervasive. It extends down into how the team has been built: who was hired and for what purpose. High value is placed on cranking out product, building the web site, creating the business logic, all of which are, of course, important. But hiring a highly skilled and probably expensive security specialist is always postponed. As with other infrastructure-related commitments, the thinking is, “we’ll get to it later.”

Over the course of years, all those decisions to postpone important security work accumulate into massive technical debt, specifically security debt. That debt might still be growing if a security breach hadn’t forced the issue.

Poor security choices around cloud-based infrastructure led to bad actors gaining control of one server. From there, those same actors were able to jump from system to system, ultimately gaining more access with each hop.

After a careful investigation, two things were clear: The development team didn’t have the experience and knowledge needed to adequately protect the platform in today’s market. And even though there was general awareness about the risks, the true level of risk was never well understood.

In the aftermath of the breach, an analysis of the company’s security environment led to the following findings:

Outdated servers and applications had known, exploitable vulnerabilities. For instance, one server was overdue for three years’ worth of patches and security updates, many of them critical. Upgrading all of those systems was deemed critical.

Misconfigured infrastructure had opened multiple resources to attack. Closing these security gaps was also deemed critical. Initial scans with Orca Security revealed just shy of 1,000 active security vulnerabilities.

Lack of strong IAM policies led to security issues, such as compliance failures and inadvertent actions that compromised security. Implementing a proper IAM policy was deemed critical.

There was virtually no documentation regarding security policies and procedures. Many of the team members who had set up systems had since left the company, leaving those systems in an unknown state. For example, several servers were still running that nobody had access to, and nobody knew what they did. Documenting policies and creating runbooks was deemed urgent.

Lack of operational observability and cost controls exposed the company to nasty surprises. This meant, essentially, nobody knew what was running, what it cost, or if something was going wrong. Creating observability was deemed urgent.

In this case, all of these problems had accumulated during years of deprioritized security activity. Once everything was catalogued, the total cost to fix all the issues amounted to over 9 months of solid work. It took nearly 3 months just to close off security gaps and implement enough controls that the company no longer felt exposed to dire security risks.

During that whole time, daily executive updates focused on exposure levels and risks, and the company was metaphorically looking over its shoulder.

This is Zac — Did you know when you refer a friend, it keeps me coming back to write more? It shows you trust and value the work I’m putting in. Plus, you earn free access!

What Is Zero Trust Architecture?

Zero trust flips the script on traditional security approaches. Instead of creating a “perimeter” around your infrastructure and implicitly trusting everything inside it, ZTA creates an inherently secure environment, both internally and externally:

No implicit trust is granted to users or devices based on location (either internal or external).

Every access request is fully authenticated, authorized, and encrypted.

Strict access controls with least-privilege principles are applied. Every role effectively starts from “no access.”

Continuous monitoring and validation of security posture is part of the system.
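To make the “no access by default” principle concrete, here is a minimal Python sketch of a policy check. It assumes a hypothetical in-memory grant table and request shape (`GRANTS`, `AccessRequest`, and `is_allowed` are illustrative names, not any product’s API); a real deployment would delegate these decisions to an identity provider and a policy engine. Note that the request’s network location is recorded but never consulted.

```python
from dataclasses import dataclass

# Hypothetical explicit grants: (role, action, resource).
# Anything not listed here is denied: every role starts from "no access."
GRANTS = {
    ("billing-analyst", "read", "invoices"),
    ("billing-admin", "read", "invoices"),
    ("billing-admin", "write", "invoices"),
}


@dataclass(frozen=True)
class AccessRequest:
    user: str
    role: str
    action: str
    resource: str
    authenticated: bool  # identity was verified for this specific request
    encrypted: bool      # request arrived over an encrypted channel (e.g. TLS)
    source_network: str  # recorded for auditing, but never used to grant access


def is_allowed(req: AccessRequest) -> bool:
    """Default deny: authentication, encryption, and an explicit grant are all required."""
    if not req.authenticated or not req.encrypted:
        return False
    # Least privilege: only an exact (role, action, resource) grant permits access.
    return (req.role, req.action, req.resource) in GRANTS
```

Under these assumptions, a `billing-analyst` on the corporate LAN is still refused a write to `invoices`, while the same analyst can read invoices from a coffee shop once authenticated over an encrypted channel.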

I’ve written about the value of adopting Zero Trust Architecture in the Delivery Playbook chapters on delivery processes & tools and security & governance. ZTA has now become the best — and likely only viable — approach to security.

Alper Kerman, a security engineer and project manager for the National Cybersecurity Center of Excellence, describes it like this:

You could be working from an enterprise-owned network, a coffee shop, home or anywhere in the world, accessing resources spread across many boundaries, from on-premises to multiple cloud environments. Regardless of your network location, a zero trust approach to cybersecurity will always respond with, “I have zero trust in you! I need to verify you first before I can trust you and grant access to the resource you want.” Hence, “never trust, always verify” — for every access request!4

That mandatory verification step is the key. Every access request is thoroughly evaluated, dynamically and in real time, against access policies, up-to-date credentials, and specific device and service privileges. Observable behavior and environmental conditions are also considered, bringing active threat monitoring and response into the equation.
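The following Python sketch illustrates that per-request evaluation under stated assumptions: the signals (credential age, MFA, device posture, privileges, and a behavioral risk score) and the thresholds are hypothetical placeholders for whatever your identity provider, device management, and monitoring tools actually supply. The point it shows is that trust is recomputed on every request rather than granted once at login.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Illustrative thresholds only; real values come from your own risk policy.
MAX_TOKEN_AGE = timedelta(minutes=15)
MAX_RISK_SCORE = 0.7


@dataclass
class RequestContext:
    token_issued_at: datetime     # timezone-aware issue time of the presented credential
    mfa_verified: bool            # credential was obtained with a second factor
    device_managed: bool          # device is enrolled in device management
    device_patched: bool          # OS and security patches are current
    service_privileges: set[str]  # privileges held by the calling device or service
    required_privilege: str       # privilege the requested resource demands
    risk_score: float             # 0.0 (normal) to 1.0 (anomalous), from monitoring


def evaluate(ctx: RequestContext, now: datetime | None = None) -> tuple[bool, list[str]]:
    """Evaluate one request; return the decision plus every reason for denial."""
    now = now or datetime.now(timezone.utc)
    reasons = []
    if now - ctx.token_issued_at > MAX_TOKEN_AGE:
        reasons.append("credential too old; re-authenticate")
    if not ctx.mfa_verified:
        reasons.append("no multifactor authentication")
    if not (ctx.device_managed and ctx.device_patched):
        reasons.append("device fails posture check")
    if ctx.required_privilege not in ctx.service_privileges:
        reasons.append("missing privilege: " + ctx.required_privilege)
    if ctx.risk_score > MAX_RISK_SCORE:
        reasons.append("anomalous behavior detected")
    # Access is granted only when no check produced a reason to deny.
    return (not reasons, reasons)
```

Returning the reasons alongside the decision is a deliberate choice in this sketch: denials become observable events that can feed threat monitoring, rather than silent failures.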

This more granular approach to security gives us a lot of benefits.

Reduced breach impact. When Colonial Pipeline fell victim to ransomware in 2021, attackers accessed the network through a compromised VPN account. The account appeared to be inactive, and had no multifactor authentication. With proper zero trust controls, this single compromised credential wouldn't have provided the broad network access that ultimately led to shutting down fuel delivery across the Eastern United States.5

Improved visibility. In the same example, more stringent observability would likely have led to the account being frozen or disabled long before it was exploited. Zero trust principles lead to rapid detection of unusual behavior and non-compliance events. Active threat detection will often counter threats quickly, limiting damage.

Simplified user experience. Contrary to what you might expect, zero trust can actually improve user experience. When Google implemented its BeyondCorp zero trust initiative, employees gained the ability to work securely from any network without using a VPN. Security improved while friction decreased.6

Better trust and remote work security. When the pandemic forced organizations to go remote, those with zero trust foundations adapted more quickly and securely. It was easier for them to create a trusted, widely distributed network. Customer trust is at stake, too: Forrester Research found that 22% of online adults in the US, 33% in Spain, 32% in Italy, and 26% in Singapore said they would permanently stop doing business with a company if they heard about a breach exposing personal information.7
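The Colonial Pipeline account described above appeared inactive yet could still be used, and had no multifactor authentication. A periodic audit for exactly that combination is a small, concrete piece of zero trust visibility. This Python sketch assumes a hypothetical list of account records exported from your identity system and an illustrative 90-day staleness threshold; the field and function names are made up for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional, Tuple

STALE_AFTER = timedelta(days=90)  # illustrative threshold, not a standard


@dataclass
class Account:
    name: str
    enabled: bool
    mfa_enrolled: bool
    last_login: Optional[datetime]  # None means the account has never been used


def accounts_to_review(
    accounts: List[Account], now: Optional[datetime] = None
) -> List[Tuple[str, List[str]]]:
    """Flag enabled accounts that are stale or unused, or that lack MFA."""
    now = now or datetime.now(timezone.utc)
    flagged = []
    for acct in accounts:
        if not acct.enabled:
            continue  # already disabled, so it cannot be used to log in
        issues = []
        if acct.last_login is None or now - acct.last_login > STALE_AFTER:
            issues.append("stale")
        if not acct.mfa_enrolled:
            issues.append("no-mfa")
        if issues:
            flagged.append((acct.name, issues))
    return flagged
```

In a fuller setup, flagged accounts would be disabled or forced through MFA enrollment, and the audit would run on a schedule rather than once.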

The risk of clinging to old models

Not implementing ZTA comes with very substantial risks — any of which can quickly turn into financial, legal, and regulatory nightmares.

Lateral movement. Once attackers breach your perimeter, traditional models allow them to move freely across your network. In the 2021 Microsoft Exchange Server attacks, attackers exploited this exact weakness.8

Insider threats. Without continuous verification, malicious insiders have far more opportunity to cause damage. The Tesla sabotage case of 2018 highlights how trusted employees can abuse their privileged access, potentially reaching into parts of an organization they shouldn’t.9

Cloud vulnerability. Traditional security models weren't designed for today's cloud environments. Capital One operated state-of-the-art cloud infrastructure, yet its 2019 data breach exploited a cascade of control failures, any one of which could have prevented the attack. It cost the company $80 million in regulatory fines alone.10

Supply chain attacks. As the SolarWinds case proved, when you implicitly trust your vendors and their software, you inherit all their security vulnerabilities.
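Lateral movement is the common thread in several of these risks. One zero trust countermeasure is to allow only the specific service-to-service flows each workload needs and deny everything else. The Python sketch below models that decision with a hypothetical allowlist (the service names and ports are illustrative); enforcement itself would live in your network policies, service mesh, or cloud security groups, and each permitted hop would still require its own authentication.

```python
# Hypothetical service-to-service allowlist: each caller may reach only the
# specific destinations (and ports) its job requires. Every other flow is
# denied, so a compromised host cannot hop freely to its neighbors.
ALLOWED_FLOWS = {
    "web-frontend": {("orders-api", 443)},
    "orders-api": {("orders-db", 5432), ("payments-api", 443)},
    "payments-api": {("payments-db", 5432)},
}


def flow_permitted(source: str, destination: str, port: int) -> bool:
    """Return True only if this exact source -> destination:port flow is allowlisted."""
    return (destination, port) in ALLOWED_FLOWS.get(source, set())


def reachable_from(start: str) -> set:
    """Every service an attacker on `start` could reach by chaining permitted flows."""
    seen, frontier = set(), [start]
    while frontier:
        node = frontier.pop()
        for dest, _port in ALLOWED_FLOWS.get(node, set()):
            if dest not in seen:
                seen.add(dest)
                frontier.append(dest)
    return seen
```

The `reachable_from` helper estimates the blast radius of a compromised service under this policy: with the allowlist above, an attacker who controls `payments-api` can reach only `payments-db`, not the orders systems.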


Starting your zero trust journey

Zero trust isn't implemented overnight; it's a strategic journey that requires careful planning and incremental implementation. The best approach is to think of good security policy (ZTA policy) as having a small, amortized cost that’s built into any system. It’s not a “one and done” job; it’s ongoing, an intrinsic part of software development.

Starting from scratch on a greenfield project may be “easy mode,” relatively speaking, since you aren’t facing a vast remediation project. But upgrading an existing system to ZTA doesn’t need to be overwhelming. In both kinds of projects, ZTA represents a lot of work. Be methodical and adopt a long view: start small, from a prioritized set of objectives, and scale from there.

Also, recognize that good security is a team effort. Everyone needs to be aware of security standards, compliance requirements, and best practices. The entire team needs to be “on board” and understand the risks of taking shortcuts or making misinformed decisions.

It’s not just about implementing technology; there are organizational changes too. Recognizing the complexity and criticality of good security is step number one. That means adopting reasonable security and technology training programs for everyone. All employees should be aware of what good security is. Changes in policy and practice will be necessary. Your development team needs the specialized skills to build a secure system.

Creating your Zero Trust Architecture

The rest of this article presents a Zero Trust Architecture plan. The plan starts with awareness planning, exploring exactly what you need to secure and why. Once you have a map of your data and security needs, it progresses into architectural development and scaling, starting small and expanding. From there, each major component of a ZTA is addressed, such as IAM (Identity and Access Management), device security, monitoring, technical controls, and organizational planning.

Each phase is represented in the following Zero Trust Architecture Lifecycle diagram. Keep in mind that ZTA is iterative and continuous; the diagram reflects this by modeling the phases after planning as a repeating cycle.